The radioactivity of rocks has been used for many years to help derive lithologies. Naturally occurring radioactive materials (NORM) include the elements uranium, thorium, potassium, radium, and radon, along with the minerals that contain them. There is usually no fundamental connection between rock type and measured gamma ray intensity, but there is a strong general correlation between radioactive isotope content and mineralogy. Logging tools have been developed to read the gamma rays emitted by these elements and interpret lithology from the information collected.

Conceptually, the simplest tools are the passive gamma ray devices. There is no source to deal with and generally only one detector. They range from simple gross gamma ray counters used for shale and bed-boundary delineation to spectral devices used in clay typing and geochemical logging. Despite their apparent simplicity, borehole and environmental effects, such as naturally radioactive potassium in drilling mud, can easily confound them.

Relating radioactivity to rock types

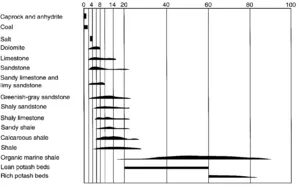

In Fig. 1, the distributions of radiation levels observed by Russell are plotted for numerous rock types. Evaporites (NaCl salt, anhydrites) and coals typically have low levels. In other rocks, the general trend toward higher radioactivity with increased shale content is apparent. At the high radioactivity extreme are organic-rich shales and potash (KCl). These plotted values can include beta as well as gamma radioactivity (collected with a Geiger counter). Modern techniques concentrate on gamma ray detection.

Fig. 1 – Distribution of relative radioactivity level for various rock types

Radioactive isotopes in rocks

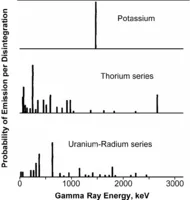

The primary radioactive isotopes in rocks are potassium-40 and the isotope series associated with the disintegration of uranium and thorium. Fig. 2 shows the equilibrium distribution of energy levels associated with each of these groups. Potassium-40 (K40) produces a single gamma ray of energy 1.46 MeV when it decays by electron capture to stable argon-40; its more common beta decay to stable calcium-40 emits no gamma ray. On the other hand, both thorium (Th) and uranium (U) break down to form a sequence of radioactive daughter products. Subsequent breakdown of these unstable isotopes produces gamma rays at a variety of energy levels. Standard gamma ray tools measure a very broad band of energy including all the primary peaks as well as lower-energy daughter peaks. As might be expected from Fig. 2[3], the total count can be dominated by the low-energy decay radiation.

Fig. 2 – Gamma-ray energy levels resulting from disintegration of unstable isotopes

The radionuclides, including radium, may become more mobile in formation waters found in oil fields. Typically, the greater the ionic strength (salinity), the higher the radium content. Produced waters can have slightly higher radioactivity than background. In addition, the radionuclides are often concentrated in the solid deposits (scale) formed in oilfield equipment. While the scale remains enclosed in flow equipment (pipes, tanks, etc.), this elevated concentration is not important. However, health risks may arise when equipment is cleaned for reuse or old equipment is put to a different application.

Table 1 lists some of the common rock types and their typical content of potassium, uranium, and thorium.

Potassium is an abundant element, so the radioactive K40 is widely distributed (Table 2). Potassium feldspars and micas are common components in igneous and metamorphic rocks. Immature sandstones can retain an abundance of these components. In addition, potassium is common in clays. Under extreme evaporitic conditions, KCl (sylvite) will be deposited and result in very high radioactivity levels. Uranium and thorium, on the other hand, are much less common. Both U and Th are found in clays (by adsorption), volcanic ashes, and heavy minerals.

History of gamma ray tools

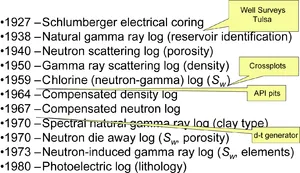

The gamma ray tool was the first nuclear log to come into service, around 1930 (see Fig. 3). Gamma ray logs are used primarily to distinguish clean, potentially productive intervals from probably unproductive shale intervals. The measurement is used to locate shale beds and quantify shale volume. Clay minerals are formed from the decomposition of igneous rock. Because clay minerals have large cation exchange capacities, they permanently retain a portion of the radioactive minerals present in trace amounts in their parent igneous micas and feldspars. Thus, shales are usually more radioactive than other sedimentary rocks. The movement of water through formations can complicate this simple model. Radioactive salts (particularly uranium salts) dissolved in the water can precipitate out in a porous formation, making otherwise clean sands appear radioactive.
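One common way to turn a gamma ray reading into a first-pass shale indicator is the linear gamma ray index, which places the reading between a clean-formation value and a shale value picked from the log. The sketch below assumes that linear form; the function name, the clipping to the range 0 to 1, and all readings are hypothetical, and the index is only a proxy for shale volume, not a calibrated measurement.

```python
def gamma_ray_index(gr, gr_clean, gr_shale):
    """Linear gamma ray index: 0 at the clean reading, 1 at the shale reading.

    All three arguments must be in the same units (for example, API units).
    The result is clipped to [0, 1]; that clipping is a choice made here.
    """
    if gr_shale <= gr_clean:
        raise ValueError("gr_shale must be greater than gr_clean")
    index = (gr - gr_clean) / (gr_shale - gr_clean)
    return min(1.0, max(0.0, index))


# Hypothetical log readings and hypothetical clean/shale picks.
readings = [25.0, 60.0, 95.0, 130.0]
indices = [gamma_ray_index(gr, gr_clean=20.0, gr_shale=120.0) for gr in readings]

for gr, index in zip(readings, indices):
    print(f"GR = {gr:6.1f}  index = {index:.2f}")
```

Because the clean and shale values are picked in the same well with the same tool, the index depends on the contrast between readings rather than on their absolute calibration. Uranium precipitated in a clean sand, as described above, would still raise the index without any shale being present.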

Fig. 3 – A timeline of nuclear logging highlights the introduction and evolution of commercial nuclear-logging measurements.

Gamma ray logging tool

Before getting into how to use the log readings, let us consider the workings of the tool. Unlike all other nuclear tools (and, in fact, nearly all other logging measurements), it is completely passive. It emits no radiation. Instead, it simply detects incoming gamma rays from the formation and (unfortunately) the borehole. Gamma rays are electromagnetic radiation, generally in the energy range 0.1 to 100 MeV. As light, this would correspond to very short wavelengths indeed. The difference between gamma rays and X-rays is largely semantic because they overlap in energy.

Originally, the detector was a Geiger-Müller tube, just as in the Geiger counter. More recently, the detectors have been switched to solid-state scintillation crystals such as NaI. When a gamma ray strikes such a crystal, it may be absorbed. If it is, the crystal produces a flash of light. This light is “seen” by a photomultiplier staring into the end of the crystal. The photomultiplier converts the light into an electrical pulse that is counted by the tool. Hence, like all nuclear tools, the raw measured quantity in a gamma ray log is counts. This means that the precision of gamma ray log measurements is governed by Poisson statistics: the standard deviation is the square root of the total number of counts recorded at a given depth, so the relative uncertainty falls as the inverse square root of the counts. Counts recorded are basically proportional to the volume of the detector crystal times its density (which determine the probability that a gamma ray will be captured within the crystal) times the length of time counted. As with all nuclear-logging measurements, the only part of this that the logger controls is the counting time. Because log measurements are depth driven, the length of time the logger counts is inversely proportional to the logging speed.
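The link between logging speed and statistical precision can be sketched numerically. The example below assumes a constant count rate and a fixed depth sample interval; the function names and all numbers are hypothetical and are not taken from any particular tool.

```python
import math


def counts_per_sample(count_rate_cps, logging_speed_ft_hr, sample_interval_ft):
    """Expected counts accumulated while the tool traverses one depth sample."""
    seconds_per_sample = sample_interval_ft / logging_speed_ft_hr * 3600.0
    return count_rate_cps * seconds_per_sample


def relative_uncertainty(counts):
    """Poisson relative uncertainty: sqrt(N) / N = 1 / sqrt(N)."""
    return 1.0 / math.sqrt(counts)


# Hypothetical detector seeing 100 counts/s, sampled every 0.5 ft.
slow = counts_per_sample(100.0, logging_speed_ft_hr=900.0, sample_interval_ft=0.5)
fast = counts_per_sample(100.0, logging_speed_ft_hr=3600.0, sample_interval_ft=0.5)

print(f"slow: {slow:.0f} counts, relative uncertainty {relative_uncertainty(slow):.3f}")
print(f"fast: {fast:.0f} counts, relative uncertainty {relative_uncertainty(fast):.3f}")
```

Under these assumptions, logging four times faster collects one quarter of the counts per sample and doubles the relative statistical uncertainty.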

Historically, gamma ray sondes have recorded the total flux of gamma radiation integrated over all energies emanating from a formation as a single count rate, the gamma ray curve. Logging tools are not uniform in their energy sensitivity. No detector responds to all the gamma rays that impinge on it. Many pass through with no effect. The size of a detector, the solid angle it subtends, and its thickness, as well as its composition (particularly its density), all affect its efficiency for detecting gamma rays. The tool housing around the detector, the casing, and even the density of the borehole fluid can all filter the gamma rays coming from the formation. All these factors not only lower the overall tool efficiency, they also lead to variations in efficiency for gamma rays of different energies. In short, the count rate recorded in a particular radioactive shale bed is not a unique property of the shale. It is a complex function of tool design and borehole conditions as well as the actual formation’s radioactivity.

Common scale

Even though gamma ray readings are generally used only in a relative sense, with reservoir (clean) and shale values determined in situ, there are advantages to a common scale. In the US and most places outside the former Soviet Union, gamma ray logs are scaled in American Petroleum Institute (API) units. This hearkens back to a desire to compare logs from tools of different designs. Tools with different detector sizes and compositions will not have the same efficiency and thus will not give the same count rate even in the same hole over the same interval. To provide a common scale, API built a calibration facility at the University of Houston. It consists of a concrete-filled pit, 4 ft in diameter, with three 8-ft beds penetrated by a 5 1/2-in. hole cased with 17-lbm/ft casing. The top and bottom beds are composed of extremely-low-radioactivity concrete. The middle bed was made approximately twice as radioactive as a typical midcontinent US shale, resulting in the zone containing 13 ppm uranium, 24 ppm thorium, and 4% potassium. The gamma ray API unit is defined as 1/200 of the difference between the count rate recorded by a logging tool in the middle of the radioactive bed and that recorded in the middle of the nonradioactive bed.
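The definition above amounts to a per-tool scale factor. The sketch below derives that factor from two calibration-pit count rates and applies it to a downhole count rate. The function names and count rates are hypothetical, and applying the factor directly to the raw count rate (with no separate background subtraction) is a simplifying assumption, not a description of any vendor's calibration procedure.

```python
def api_calibration_factor(cps_radioactive_bed, cps_low_activity_bed):
    """API units per count/s, from the two calibration-pit readings.

    By definition, the difference between the two readings spans 200 API units.
    """
    difference = cps_radioactive_bed - cps_low_activity_bed
    if difference <= 0:
        raise ValueError("radioactive-bed count rate must exceed low-activity rate")
    return 200.0 / difference


def to_api_units(count_rate_cps, factor):
    """Scale a raw count rate to API units (simplified: no offset applied)."""
    return count_rate_cps * factor


# Hypothetical tool: 450 counts/s in the radioactive bed, 50 counts/s in the
# low-activity bed, then 120 counts/s recorded downhole.
factor = api_calibration_factor(450.0, 50.0)
print(f"factor = {factor:.2f} API per count/s")
print(f"downhole reading = {to_api_units(120.0, factor):.1f} API")
```

A second tool with a larger or denser crystal would record higher count rates in both beds and therefore get a smaller factor, which is how the scale brings tools of different designs onto comparable readings under pit conditions.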

While it has served fairly well for more than 40 years, this is a poor way to define a fundamental unit. Different combinations of isotopes, tool designs, and hole conditions may give the same count rate, so the calibration does not transfer very far from the calibration-pit conditions. In contrast, Russian gamma ray logs are typically scaled in microroentgens (μR)/hr, which does correspond to a specific amount of radiation. The conversion to API units is only loosely defined, but it is often suggested that the conversion factor is 1 μR/hr = 10 API units for Geiger tube detectors, but 15 μR/hr = 10 API for scintillation detectors. This detector dependence is consistent with the previous discussion of the many factors that can affect gamma ray readings. The problem is further aggravated in logging-while-drilling (LWD) measurements. The API unit provides a degree of standardization, but despite the best efforts of tool designers, one cannot expect tools of different designs to read exactly the same under all conditions. Fortunately, none of this is very important because gamma ray measurements are generally used only in a relative way.

Factors affecting readings

Because we use gamma ray logs as relative measures, precise calibration is not very important except as a visual log display feature. Environmental effects are much more important. Consider a radioactive volume of rock traversed by a borehole. Nuclear physics tells us that gamma rays are absorbed as they pass through the formation. For typical formations, this limits the depth of investigation to approximately 18 in. Considering only the geometry, the count rate opposite a given rock type will be much lower in a larger borehole in which the detector is effectively farther from the source of gamma rays. In an open hole, borehole size almost always has the greatest effect on the count-rate calibration. This problem can go well beyond changes in bit size. Especially if shales or sands are selectively washed out, borehole size can imprint itself on the expected gamma ray contrast between shales and sands. If the borehole is large enough, the density of the fluid filling the borehole can also impact the calibration by absorbing some of the gamma rays before they get to the tool.
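The absorption argument can be illustrated with simple exponential attenuation. The sketch below is a narrow-beam approximation that ignores scattering and borehole geometry, so it is not a model of tool response. The linear attenuation coefficient of 0.13 per cm is an assumed, illustrative value of roughly the size one would expect for a gamma ray near 1.46 MeV in rock of density about 2.5 g/cm3; the function name is hypothetical.

```python
import math


def unscattered_fraction(distance_cm, mu_per_cm=0.13):
    """Fraction of gamma rays crossing distance_cm of rock without interacting.

    mu_per_cm is an assumed linear attenuation coefficient, not a measured
    value for any particular formation.
    """
    if distance_cm < 0:
        raise ValueError("distance_cm must be non-negative")
    return math.exp(-mu_per_cm * distance_cm)


# Distances into the formation, in inches, converted to centimeters.
for inches in (3, 6, 12, 18):
    fraction = unscattered_fraction(inches * 2.54)
    print(f"{inches:2d} in: {fraction:.4f}")
```

Under this assumption, only a small fraction of gamma rays emitted about 18 in. from the detector arrive unscattered, which is in line with the stated depth of investigation.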

Barite in the mud is another complication, filtering the incoming gamma rays. Thus, the gamma ray borehole size and fluid corrections are often very important and should be made if at all possible. Casing absorbs a large fraction of the gamma rays traversing it on their way to the borehole, so if the tool is run in a cased hole, casing corrections are very important. Tool design has a large impact on environmental corrections. The housing and the location of the detectors both affect how the incoming gamma rays are filtered. It is important to use the right environmental corrections for the tool being run. This is especially true for LWD tools that may consist of multiple detectors embedded in large, heavy drill collars that filter the incoming gamma rays in unique ways.
